REF:

- https://ml-cheatsheet.readthedocs.io/en/latest/logistic_regression.html#introduction (logistic regression with Python code)

- https://intellipaat.com/community/10666/why-the-cost-function-of-logistic-regression-has-a-logarithmic-expression

- https://www.coursera.org/learn/machine-learning/lecture/1XG8G/cost-function

- https://peterroelants.github.io/posts/cross-entropy-logistic/ (VVI)

- https://www.youtube.com/watch?v=MztgenIfGgM (VVI)

- https://stats.stackexchange.com/questions/278771/how-is-the-cost-function-from-logistic-regression-derivated (VVVI: derivation of the gradient from the cost function)

- https://www.geeksforgeeks.org/understanding-logistic-regression/ (logistic regression with Python code)

- https://towardsdatascience.com/building-a-logistic-regression-in-python-301d27367c24 (logistic regression with code and data)

- https://ml-cheatsheet.readthedocs.io/en/latest/loss_functions.html#loss-cross-entropy (description about cross entropy loss)

- https://teddykoker.com/2019/06/multi-class-classification-with-logistic-regression-in-python/ (multi-class logistic regression Python code; VVVI for multi-class code)

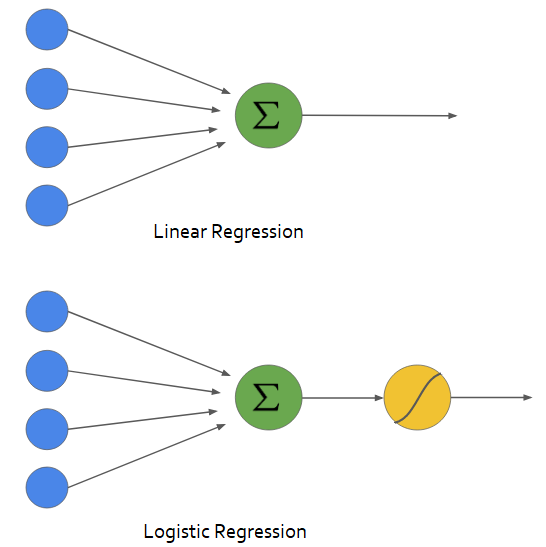

Example: linear regression and logistic regression

Logistic Regression practice:

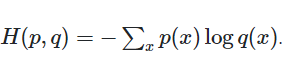

Cross-Entropy

Cross-entropy loss, or log loss, measures the performance of a classification model whose output is a probability value between 0 and 1. Cross-entropy is a measure of how different two probability distributions are from each other. If p and q are discrete, we have:

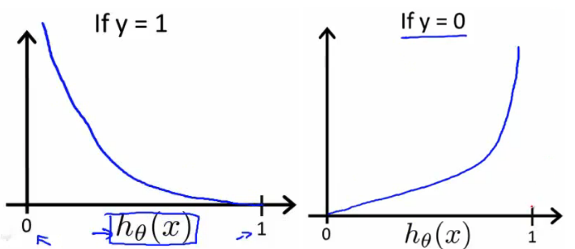
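As a rough illustration of the discrete case, the standard definition H(p, q) = -sum over x of p(x) * log(q(x)) can be sketched in a few lines of plain Python. The function name `cross_entropy`, the `eps` clipping value, and the example distributions are assumptions made for this sketch; they do not come from the referenced articles.

```python
import math

def cross_entropy(p, q, eps=1e-12):
    """Cross-entropy H(p, q) = -sum_x p(x) * log(q(x)) for discrete distributions.

    p is the true distribution, q is the predicted one (same length).
    eps is an assumed clipping value that avoids log(0).
    """
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for p_x, q_x in zip(p, q):
        if p_x > 0:  # terms with p(x) = 0 contribute nothing
            total -= p_x * math.log(max(q_x, eps))
    return total

# Hypothetical example: the true class is index 0 (one-hot label).
true_dist = [1.0, 0.0, 0.0]
good_pred = [0.9, 0.05, 0.05]   # confident and correct
bad_pred = [0.1, 0.45, 0.45]    # mostly wrong

loss_good = cross_entropy(true_dist, good_pred)
loss_bad = cross_entropy(true_dist, bad_pred)
print(loss_good, loss_bad)  # the worse prediction gets the larger loss
```

With a one-hot true distribution, the sum collapses to the negative log of the probability assigned to the correct class, which is why the loss grows as that probability shrinks.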

The benefit of taking the logarithm becomes clear when you look at the cost function graphs for y=1 and y=0. These smooth, monotonic functions [7] (always increasing or always decreasing) make it easy to calculate the gradient and minimize the cost. Image from Andrew Ng’s slides on logistic regression [1].
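The shape of the two cost curves can be checked numerically. The sketch below assumes the usual per-example cost for logistic regression: -log(h) when y=1 and -log(1 - h) when y=0, where h is the predicted probability. The names `log_cost` and `mean_log_loss` are hypothetical helpers for illustration only.

```python
import math

def log_cost(h, y):
    """Per-example cost: -log(h) if y == 1, -log(1 - h) if y == 0.

    h must be strictly between 0 and 1 (a predicted probability).
    """
    return -math.log(h) if y == 1 else -math.log(1.0 - h)

def mean_log_loss(predictions, labels):
    """Average cost using the combined form -(y*log(h) + (1-y)*log(1-h))."""
    total = 0.0
    for h, y in zip(predictions, labels):
        total -= y * math.log(h) + (1 - y) * math.log(1.0 - h)
    return total / len(labels)

# For y = 1 the cost falls as h rises; for y = 0 it rises as h rises.
for h in (0.1, 0.5, 0.9):
    print(h, round(log_cost(h, 1), 4), round(log_cost(h, 0), 4))
```

Both curves move in one direction only, and the cost grows without bound as a confident prediction turns out to be wrong, which is the behavior the graphs for y=1 and y=0 illustrate.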

REF:

- https://ml-cheatsheet.readthedocs.io/en/latest/loss_functions.html#loss-cross-entropy

- https://teddykoker.com/2019/06/multi-class-classification-with-logistic-regression-in-python/ (multi-class logistic regression Python code)

- https://medium.com/@jjw92abhi/is-logistic-regression-a-good-multi-class-classifier-ad20fecf1309 (multinomial logistic regression)

Multi-class classification with logistic regression

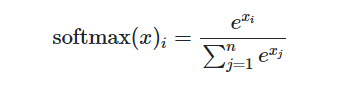

Multinomial logistic regression is a form of logistic regression used to predict a target variable that has more than 2 classes. It is a modification of logistic regression that uses the softmax function instead of the sigmoid function, together with the cross-entropy loss function. The softmax function squashes all values to the range [0,1], and the elements sum to 1.

https://medium.com/@jjw92abhi/is-logistic-regression-a-good-multi-class-classifier-ad20fecf1309 (Multinomial Logistic Regression)
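The softmax step described above can be sketched in plain Python. This is a minimal illustration, not the implementation from the linked articles: the function names, the example scores, and the max-subtraction trick for numerical stability are assumptions added here.

```python
import math

def softmax(scores):
    """Map raw class scores to probabilities in [0, 1] that sum to 1."""
    shift = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_class(scores):
    """Return the index of the class with the highest softmax probability."""
    probs = softmax(scores)
    return max(range(len(probs)), key=probs.__getitem__)

# Hypothetical raw scores for a 3-class problem.
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)
true_class = 0
loss = -math.log(probs[true_class])  # cross-entropy loss for a one-hot label

print(probs, sum(probs), predict_class(scores), loss)
```

For two classes, softmax over the scores [z, 0] gives the same probability as the sigmoid of z, which is one way to see multinomial logistic regression as a generalization of the binary case.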
