Book name:

- http://www.deeplearningbook.org/ (referred by Tanvir san)

REF:

- https://medium.com/deep-learning-demystified/generalization-in-neural-networks-7765ee42ac23 (basics of NN)

- https://medium.com/datadriveninvestor/the-basics-of-neural-networks-304364b712dc (basics of NN)

- https://gadictos.com/neural-network-pt1/ (backpropagation)

- https://towardsdatascience.com/lets-code-a-neural-network-in-plain-numpy-ae7e74410795 (given by Tanvir san)

- http://cs231n.github.io/neural-networks-case-study/ (given by Rossi san and practiced in AI class with Palash ***************)

- https://medium.com/binaryandmore/beginners-guide-to-deriving-and-implementing-backpropagation-e3c1a5a1e536 (mathematical derivation of backpropagation; very important)

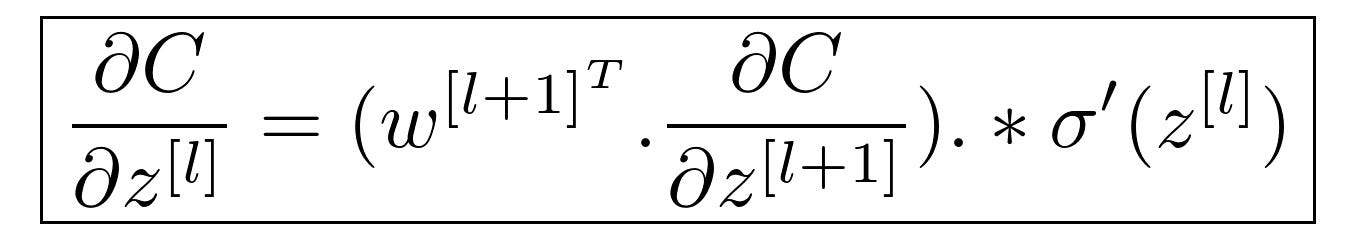

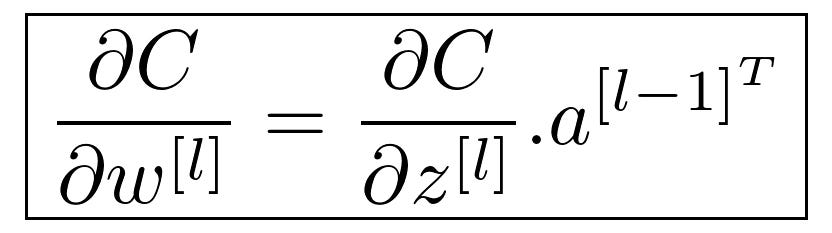

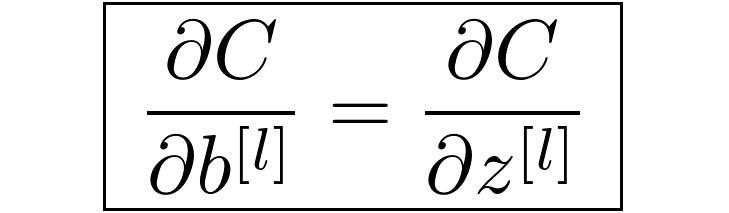

- Four equations are needed to understand backpropagation:

- Derivative (change) of the last layer's activation

- Derivative (change) of a hidden layer's activation

- Derivative with respect to w

- Derivative with respect to b

- The above equations are based on Andrew Ng's videos. We need to pay attention to the NN notation to understand them: Andrew Ng's presentation and the Medium article's presentation write W, Z, and the layer location differently.

- https://math.stackexchange.com/questions/945871/derivative-of-softmax-loss-function (derivative of the softmax loss function)
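
The four backpropagation equations listed above can be sketched in NumPy as follows. This is only a minimal illustration, not the code from any of the linked articles. It assumes a two-layer network with a sigmoid hidden layer, a softmax output layer, cross-entropy loss, and one example per column (close to Andrew Ng's notation). All function and variable names (`forward`, `backward`, `numeric_grad`, `dZ2`, and so on) are hypothetical. With softmax and cross-entropy, the last-layer term reduces to the well-known form `A2 - Y`.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Column-wise softmax; subtracting the max keeps exp() numerically stable.
    e = np.exp(z - z.max(axis=0, keepdims=True))
    return e / e.sum(axis=0, keepdims=True)

def forward(X, params):
    W1, b1, W2, b2 = params
    Z1 = W1 @ X + b1      # hidden pre-activation
    A1 = sigmoid(Z1)      # hidden activation
    Z2 = W2 @ A1 + b2     # output pre-activation
    A2 = softmax(Z2)      # class probabilities, one column per example
    return Z1, A1, Z2, A2

def loss(A2, Y):
    # Mean cross-entropy over the m examples (Y is one-hot, one column each).
    m = Y.shape[1]
    return float(-np.sum(Y * np.log(A2)) / m)

def backward(X, Y, params):
    W1, b1, W2, b2 = params
    m = X.shape[1]
    Z1, A1, Z2, A2 = forward(X, params)
    # (1) Last layer: softmax + cross-entropy gives dZ2 = A2 - Y.
    dZ2 = A2 - Y
    # (2) Hidden layer: push dZ2 back through W2, then through sigmoid'.
    dZ1 = (W2.T @ dZ2) * A1 * (1.0 - A1)
    # (3) Derivative w.r.t. W: dZ times the previous layer's activation.
    dW2 = dZ2 @ A1.T / m
    dW1 = dZ1 @ X.T / m
    # (4) Derivative w.r.t. b: average dZ over the examples.
    db2 = dZ2.sum(axis=1, keepdims=True) / m
    db1 = dZ1.sum(axis=1, keepdims=True) / m
    return dW1, db1, dW2, db2

def numeric_grad(X, Y, params, index, eps=1e-6):
    # Central-difference estimate of d(loss)/d(params[index]), for checking.
    grad = np.zeros_like(params[index])
    for i in np.ndindex(grad.shape):
        old = params[index][i]
        params[index][i] = old + eps
        plus = loss(forward(X, params)[3], Y)
        params[index][i] = old - eps
        minus = loss(forward(X, params)[3], Y)
        params[index][i] = old  # restore the original value
        grad[i] = (plus - minus) / (2.0 * eps)
    return grad

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(2, 5))                   # 2 features, 5 examples
    Y = np.eye(3)[:, rng.integers(0, 3, size=5)]  # one-hot labels, 3 classes
    params = [rng.normal(size=(4, 2)), np.zeros((4, 1)),
              rng.normal(size=(3, 4)), np.zeros((3, 1))]
    for name, g, i in zip(["dW1", "db1", "dW2", "db2"],
                          backward(X, Y, params), range(4)):
        diff = np.abs(g - numeric_grad(X, Y, params, i)).max()
        print(name, "max difference from numeric gradient:", diff)
```

Comparing each analytic gradient against a finite-difference estimate is a quick way to confirm that the signs and the layer indexing are consistent, which is where the different notations tend to cause mistakes.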
